Why AI Regulatory Compliance Matters More Than Ever

AI regulatory compliance is the essential process of ensuring artificial intelligence systems adhere to laws, industry rules, and ethical standards. For any business using AI, it’s no longer optional.

The stakes are high. With 73% of businesses using AI, the pressure to innovate is immense. However, 78% of consumers believe organizations must ensure AI is developed ethically. Failing to do so can lead to fines of up to €35 million or 7% of global annual turnover under the EU AI Act, along with severe reputational damage and a loss of customer trust.

Key Compliance Pillars:

- Major Regulations: EU AI Act, GDPR, NIST AI RMF, ISO/IEC 42001.

- Core Requirements: Risk assessments, data governance, transparency, human oversight, and robust documentation.

- Business Impact: Avoid massive fines, protect your reputation, and build customer trust.

- Action Steps: Establish a governance framework, conduct risk assessments, implement monitoring, and train your team.

The costs of non-compliance are real. Regulators have already issued multimillion-euro fines for data privacy violations related to AI. In the US, the SEC has fined firms for misleading AI claims. Compliance protects you from financial penalties and legal scrutiny while building the trust needed to maintain a competitive edge.

This guide breaks down what you need to know about AI regulatory compliance, from understanding global regulations to building a strategy that protects your business while enabling innovation.

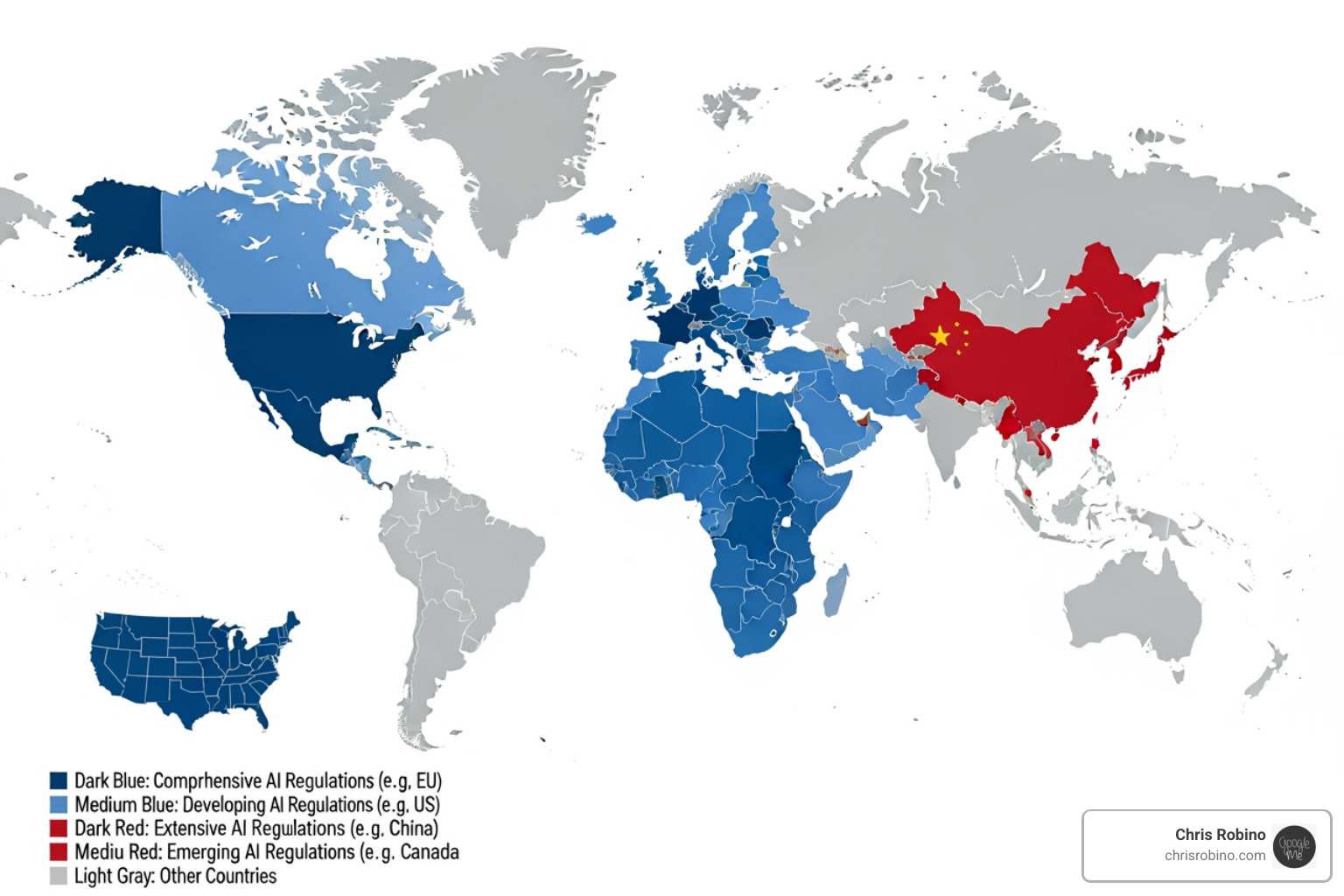

Navigating the Global AI Regulatory Compliance Landscape

The world of AI regulation is a complex and shifting landscape. Lawmakers are working to establish rules that protect people while enabling innovation, creating a patchwork of requirements for businesses operating across borders. Understanding this environment is key to deploying AI responsibly.

Key Global Regulations and Frameworks

Several key frameworks are shaping global AI compliance:

- The EU AI Act: As the world’s first comprehensive AI law, the EU AI Act takes a risk-based approach. It bans “unacceptable risk” AI (like social scoring), places strict requirements on “high-risk” systems (used in employment, credit, etc.), and mandates transparency for “limited-risk” systems like chatbots. Fines for non-compliance can reach €35 million or 7% of global turnover.

- The United States Approach: The US uses a combination of executive orders and agency guidance. The NIST AI Risk Management Framework offers voluntary but influential guidance on managing AI risks, while the Blueprint for an AI Bill of Rights outlines principles for ethical AI. Federal agencies and individual states are also developing their own rules.

- International Standards: Voluntary standards like ISO/IEC 42001 (AI Management Systems) and ISO/IEC 27001 (Information Security) provide practical frameworks that help businesses demonstrate compliance with various regulations.
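To make the EU AI Act's risk-based approach concrete, here is a minimal Python sketch of a triage helper for an internal AI inventory. The use-case labels (`social_scoring`, `employment`, `credit`, `chatbot`) and the function name `classify_use_case` are hypothetical and only mirror the examples given above; the Act's real categories are far more detailed, so treat the result as a prompt for legal review, not a determination.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # banned outright
    HIGH = "high"                  # strict requirements apply
    LIMITED = "limited"            # transparency obligations apply
    MINIMAL = "minimal"            # fallback tier, not discussed in the list above


# Hypothetical, non-exhaustive labels taken from the examples in the text.
PROHIBITED_USES = {"social_scoring"}
HIGH_RISK_USES = {"employment", "credit"}
LIMITED_RISK_USES = {"chatbot"}


def classify_use_case(use_case: str) -> RiskTier:
    """Return a first-pass risk tier for an internal use-case label."""
    key = use_case.strip().lower()
    if key in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if key in HIGH_RISK_USES:
        return RiskTier.HIGH
    if key in LIMITED_RISK_USES:
        return RiskTier.LIMITED
    # Anything unrecognized falls through here and still needs human review.
    return RiskTier.MINIMAL
```

Checking the tiers from most to least restrictive means a system that matches more than one label is always assigned the stricter tier.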

Other nations, including China and Canada, are also implementing their own rules, forcing global companies to navigate a complex web of overlapping requirements for data privacy, algorithmic fairness, and transparency.

The High Stakes of Non-Compliance

Ignoring AI regulatory compliance can be catastrophic. The consequences are severe and multifaceted:

- Financial Penalties: Beyond the EU AI Act, regulations like GDPR carry fines up to €20 million or 4% of global turnover. Regulators are actively enforcing these rules, with penalties in the tens of millions already levied for violations like scraping photos for facial recognition databases without consent.

- Legal and Regulatory Scrutiny: Non-compliance invites audits, investigations, and class-action lawsuits. AI systems found to be biased in areas like hiring or lending can trigger civil rights violations. High-risk AI systems may even be pulled from the market entirely.

- Reputational Damage: Public trust evaporates when AI is seen as unfair, biased, or invasive. With 78% of consumers demanding ethical AI, a compliance failure can become a global news story overnight, leading to customer churn and long-term brand damage.

- Operational Setbacks: Non-compliant systems create technical debt, requiring expensive re-engineering. Delays, internal resistance, and the need to retrain models can halt innovation and allow compliant competitors to pull ahead.
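The fine ceilings quoted above combine a fixed amount with a share of turnover. Under both the EU AI Act and GDPR, the top-tier cap for companies is whichever of the two is higher, so exposure grows with company size. The sketch below works through that arithmetic; the names `FineCap` and `max_fine_eur` are illustrative, and the figures are statutory maximums, not predictions of any actual penalty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineCap:
    fixed_eur: int      # fixed ceiling in euros
    turnover_pct: int   # ceiling as a whole-number percentage of global turnover


# Top-tier ceilings as quoted in the text.
EU_AI_ACT_CAP = FineCap(fixed_eur=35_000_000, turnover_pct=7)
GDPR_CAP = FineCap(fixed_eur=20_000_000, turnover_pct=4)


def max_fine_eur(cap: FineCap, global_turnover_eur: int) -> float:
    """Upper bound on a fine: the higher of the fixed cap and the turnover share."""
    if global_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    turnover_based = cap.turnover_pct * global_turnover_eur / 100
    return max(cap.fixed_eur, turnover_based)
```

For a company with €1 billion in global turnover, the turnover-based figure dominates: `max_fine_eur(EU_AI_ACT_CAP, 1_000_000_000)` gives €70 million, twice the fixed cap. For a company with €100 million in turnover, the fixed €35 million cap is the binding one.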

Building a Robust AI Compliance Strategy

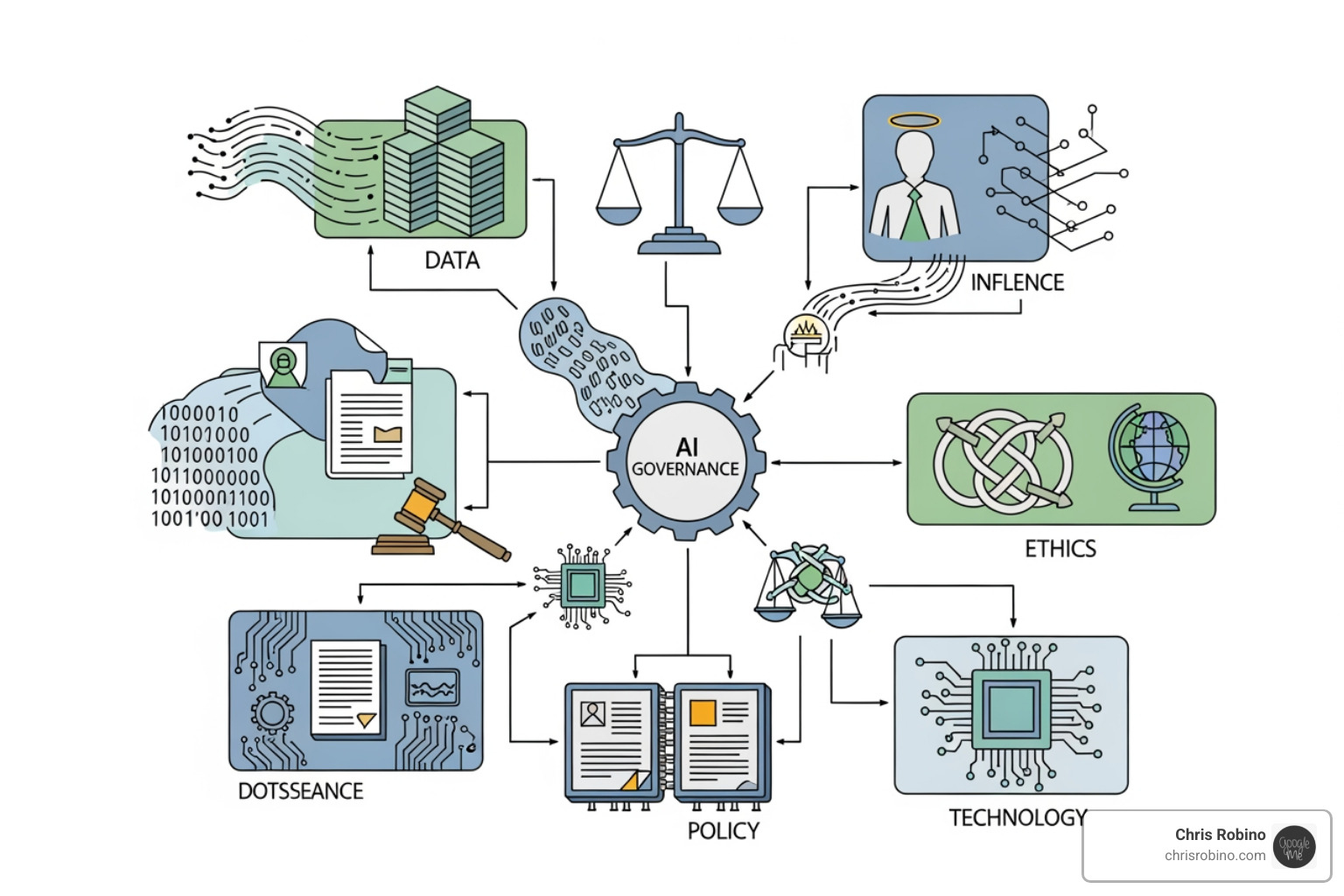

A strong compliance strategy embeds responsible AI practices into your organization’s DNA. It’s about being proactive, not reactive.

- Establish AI Governance: Create clear internal policies and define roles and responsibilities. Make compliance a shared duty across legal, security, engineering, and business teams.

- Conduct Proactive Risk Assessments: Continuously assess for bias, security vulnerabilities, and privacy risks throughout the AI lifecycle, from design to deployment.

- Ensure Data Quality and Governance: Use accurate, unbiased, and legally acquired data. Implement policies for data minimization and secure storage.

- Prioritize Transparency and Explainability: Document how AI models work and be prepared to explain their decisions, especially for high-risk systems. AI-powered analytics can help make systems more understandable.

- Implement Human Oversight: Design systems with meaningful human review, especially for critical decisions. Ensure there are clear paths to appeal or override AI determinations.

- Monitor and Audit Continuously: Use automated tools to monitor model performance, security, and compliance in real time to catch issues before they escalate.

- Integrate Ethical AI Development: Build fairness, accountability, and user well-being into the development process from the start.

- Maintain Robust Documentation: Keep detailed records of models, data, risk assessments, and compliance checks to demonstrate due diligence to regulators.
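As one illustration of what a bias check inside a risk assessment might look like, the sketch below computes a disparate impact ratio: the lowest group selection rate divided by the highest. The 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment contexts; it is not a requirement stated in this guide, and the function names and group labels are hypothetical.

```python
from typing import Mapping


def selection_rate(selected: int, total: int) -> float:
    """Share of a group that received the favorable outcome."""
    if total <= 0:
        raise ValueError("total must be positive")
    return selected / total


def disparate_impact_ratio(rates: Mapping[str, float]) -> float:
    """Lowest group selection rate divided by the highest."""
    if not rates:
        raise ValueError("at least one group is required")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


def needs_review(rates: Mapping[str, float], threshold: float = 0.8) -> bool:
    """Flag the model for human review when the ratio falls below the threshold."""
    return disparate_impact_ratio(rates) < threshold
```

For example, if a hiring model advances 50 of 100 applicants from one group and 30 of 100 from another, the ratio is 0.3 / 0.5 = 0.6, which falls below 0.8 and would be flagged. A flag like this is a trigger for the human oversight and documentation steps above, not a legal finding on its own.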

The Future of AI in Governance and How to Stay Ahead

The world of AI regulatory compliance is constantly evolving. The organizations that thrive will be those that use AI not just as a product to be regulated, but as a tool to stay compliant and competitive.

Future Trends and the Evolving Role of Compliance Professionals

The compliance landscape is shifting from a defensive posture to a strategic one. Forward-thinking companies are embedding governance into AI systems from day one, a philosophy known as “responsible by design.”

This shift is creating new roles like AI Ethics Officers and dedicated governance teams staffed by professionals with hybrid skills in technology, law, and data science. The tools are also evolving, with quantitative risk models replacing subjective checklists to measure potential harm with greater precision.

We’re also seeing greater collaboration between business and government, as companies help shape practical, workable regulations. For compliance professionals, continuous learning is essential to keep pace. This proactive stance is paying off: 80% of C-suite executives now view investment in responsible AI as a competitive advantage, one that builds trust through ethical AI development.

Leveraging AI for Improved Regulatory Compliance

AI is not just the subject of regulation; it’s also a powerful tool for managing it. Companies are using AI to fight complexity with intelligence.

- Automate Tedious Tasks: AI can handle evidence collection and map controls across multiple frameworks (ISO 27001, GDPR, etc.), freeing up teams to focus on strategic decisions.

- Enable Real-Time Monitoring: Instead of finding issues months later, AI-powered systems can watch for control failures or model drift the moment they happen, enabling a proactive response.

- Generate Actionable Insights: AI-powered analytics can sift through vast compliance data, spot trends, predict risks, and present findings in intuitive dashboards.

- Accelerate Policy and Control Management: Generative AI can help draft compliance policies and check existing controls against new regulations, dramatically speeding up response times.
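To give a sense of what automated drift monitoring can involve, here is a minimal sketch using the Population Stability Index (PSI), one common statistic for comparing a model's current input or score distribution against a baseline. The choice of PSI, the 0.2 alert threshold (a widely used rule of thumb), and the function names are assumptions made for illustration; the tools described above may work quite differently.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], current: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI between two binned distributions given as per-bin proportions."""
    if len(baseline) != len(current):
        raise ValueError("both distributions must use the same bins")
    psi = 0.0
    for b, c in zip(baseline, current):
        # Floor each proportion at eps so empty bins do not cause log(0) or division by zero.
        b, c = max(b, eps), max(c, eps)
        psi += (c - b) * math.log(c / b)
    return psi


def drift_detected(
    baseline: Sequence[float], current: Sequence[float], threshold: float = 0.2
) -> bool:
    """Raise an alert when PSI exceeds the (assumed) threshold."""
    return population_stability_index(baseline, current) > threshold
```

Running a check like this on a schedule against each production model, and logging the result, would produce both an early warning and an audit trail for the documentation requirement discussed earlier.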

By using AI-driven business solutions, companies can transform compliance from a cost center into a strategic advantage. The future of AI regulatory compliance is about using AI to achieve both innovation and responsibility simultaneously.

If you’re ready to turn compliance into a strategic advantage, get expert guidance on emerging tech strategy and let’s build a responsible and innovative future together.