Why Ethical AI Development is Critical for Building Trustworthy Technology

Ethical AI development is the practice of creating AI systems that prioritize fairness, transparency, accountability, and human well-being. As AI takes on a larger role in business decisions, responsible development practices are more urgent than ever.

Key Principles of Ethical AI Development:

- Fairness: Avoid discriminatory biases in AI systems.

- Transparency: Make AI decision-making understandable and explainable.

- Accountability: Establish clear responsibility for AI outcomes.

- Privacy: Protect personal data with robust governance.

- Safety: Rigorously test AI for reliability and security.

- Human Oversight: Maintain human control and intervention capabilities.

AI built on biased data can cause significant harm, especially to underrepresented groups. Conversely, ethical AI builds public trust, reduces risks, and creates sustainable technology that augments human intelligence.

I'm Chris Robino, and I’ve spent over two decades helping organizations navigate digital transformation and implement AI with integrity. My expertise in ethical AI development comes from helping companies build responsible practices in from the ground up, aligning AI systems with both business goals and human values.

A Practical Framework for Ethical AI Development

Building AI systems that serve humanity requires a solid framework. Here are the essential pillars for making ethical AI development practical and sustainable.

Mitigating Bias and Ensuring Fairness in AI Systems

AI systems learn from data, and historical data is often filled with human bias. An AI trained on this flawed data learns to perpetuate the same unfairness. For example, a prominent AI recruiting tool was scrapped after it was discovered to penalize resumes from female candidates. Similarly, a study from MIT and Stanford found that commercial facial-analysis systems had far higher error rates for darker-skinned women than for lighter-skinned men.

To counter this, ethical AI development requires rigorous data audits to find and correct historical biases. Fairness metrics make it possible to measure and track bias throughout development. Crucially, diverse development teams are more likely to spot potential issues before they cause real-world harm.
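To make "fairness metrics" concrete, here is a minimal Python sketch of two common group-level checks: the demographic parity difference (the gap in positive-outcome rates between groups) and the disparate impact ratio (lowest rate divided by highest). The function names and the screening data are illustrative assumptions, not a specific library's API; real audits usually combine several metrics.

```python
from collections import defaultdict
from typing import Sequence


def selection_rates(preds: Sequence[int], groups: Sequence[str]) -> dict[str, float]:
    """Share of positive predictions (1 = e.g. 'advance to interview') for each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(preds, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(preds: Sequence[int], groups: Sequence[str]) -> float:
    """Gap between the highest and lowest selection rate; 0.0 means parity."""
    rates = selection_rates(preds, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(preds: Sequence[int], groups: Sequence[str]) -> float:
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    rates = selection_rates(preds, groups)
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical screening outcomes for two applicant groups.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
print(disparate_impact_ratio(preds, groups))
```

Tracking numbers like these at each model iteration turns "check for bias" into a measurable release criterion. A ratio well below 0.8 is often treated as a red flag, echoing the "four-fifths rule" from US employment-selection guidance, though the right threshold depends on context.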

Building Transparent and Explainable AI (XAI)

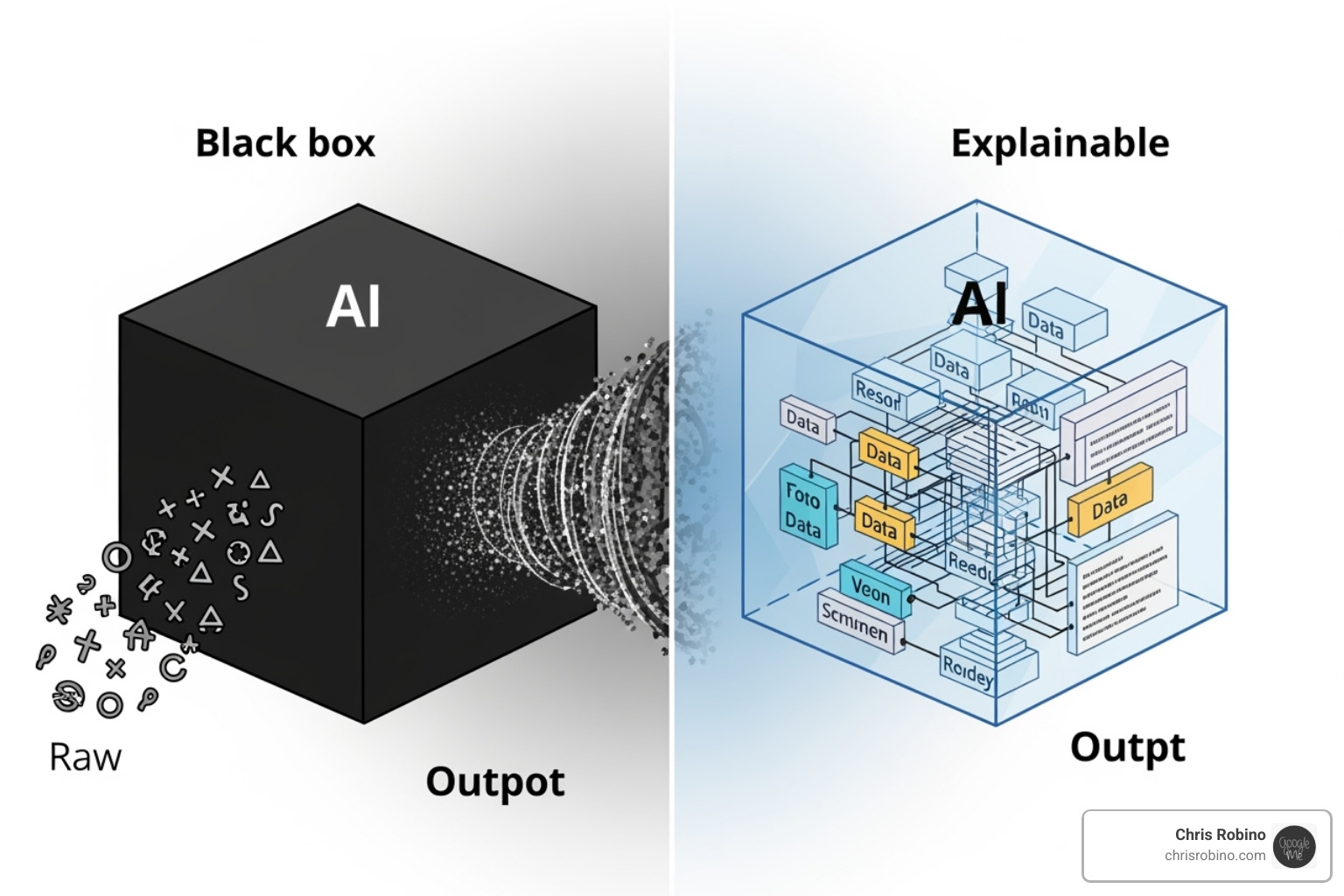

When an AI system makes a life-altering decision, “the computer said so” is not an acceptable explanation. This “black box” problem erodes trust and makes it impossible to verify or improve AI decisions. Users lose confidence, regulators can’t ensure compliance, and developers can’t fix bugs.

Explainable AI (XAI) addresses this problem. It involves building systems that can explain their reasoning in human-understandable terms. Techniques like LIME and SHAP help us look inside the black box by estimating how much each input factor contributed to a particular decision. The goal is to make decision-making understandable and justifiable, especially in high-stakes applications, which fosters trust and collaboration between humans and AI.
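The idea behind SHAP comes from Shapley values: a feature's attribution is its average marginal contribution to the prediction across all possible coalitions of the other features. The sketch below computes exact Shapley values by brute force for a toy model. It is not the SHAP library's API. It assumes that "absent" features are replaced by a baseline (for example, an average applicant), and the credit-scoring model and its weights are purely hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature."""
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Features in the coalition keep the applicant's value; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):  # coalition sizes 0..n-1, drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        attributions.append(phi)
    return attributions


# Hypothetical linear credit score: income and tenure help, debt ratio hurts.
def credit_score(z: Sequence[float]) -> float:
    income, tenure, debt_ratio = z
    return 0.5 * income + 0.3 * tenure - 0.2 * debt_ratio


applicant = [4.0, 2.0, 5.0]
average_applicant = [2.0, 2.0, 1.0]
print(shapley_values(credit_score, applicant, average_applicant))
```

For a linear model, each attribution reduces to weight times (value minus baseline), roughly [1.0, 0.0, -0.8] here, which makes the sketch easy to sanity-check. The attributions also sum to the difference between the applicant's score and the baseline score. Because this version evaluates every coalition, its cost grows exponentially with the number of features, which is why libraries such as SHAP rely on approximations and model-specific algorithms.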

Safeguarding Privacy and Data Security

AI’s hunger for data creates a tension with privacy rights. Ethical AI development requires building powerful systems while respecting privacy. This starts with privacy by design, making data protection a core architectural feature from the beginning.

Effective data governance is crucial, with clear policies on data collection, use, and retention. Key principles include data minimization (collecting only necessary data) and anonymization to protect individuals. User consent must be meaningful, giving people clear information and control over their data. Frameworks like the EU’s GDPR offer a blueprint for building AI systems that people can trust with their personal information.
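Data minimization and de-identification can be enforced in code at the point where records enter an AI pipeline. The minimal sketch below keeps only allowlisted fields, coarsens quasi-identifiers (age into ten-year bands, postcode into a short prefix), and replaces the user ID with a keyed hash. The field names, the allowlist, and the coarsening rules are hypothetical. A keyed hash is pseudonymization, not full anonymization: anyone holding the key can re-link records.

```python
import hashlib
import hmac

# Hypothetical allowlist: the only fields this model is documented to need.
ALLOWED_FIELDS = {"age", "postcode", "loan_amount"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (pseudonymization, not anonymization)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def age_band(age: int, width: int = 10) -> str:
    """Coarsen an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def minimize_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allowlisted fields, coarsen quasi-identifiers, and pseudonymize the ID."""
    out = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    if "age" in out:
        out["age"] = age_band(out["age"])
    if "postcode" in out:
        out["postcode"] = out["postcode"][:3]  # keep only a coarse area prefix
    out["subject"] = pseudonymize(record["user_id"], secret_key)
    return out


raw = {
    "user_id": "u-123",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "age": 37,
    "postcode": "SW1A 1AA",
    "loan_amount": 5000,
}
key = b"load-me-from-a-secrets-manager"  # hypothetical; never hard-code keys in production
print(minimize_record(raw, key))
```

The name and email never reach the model, and the same user always maps to the same token, so records can still be joined without exposing identity. The GDPR still treats pseudonymized data as personal data, so stronger techniques such as aggregation are usually needed before data can be called anonymous.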

Establishing Accountability and Robust Human Oversight

When an AI system fails, who is responsible? Ethical AI development demands clear lines of accountability. Responsibility frameworks should map out who is accountable at each stage of the AI lifecycle, from development to deployment.

Human-in-the-loop systems are essential for high-risk applications, ensuring meaningful human control. Humans must be able to monitor, intervene, and override AI decisions that conflict with ethical guidelines. Comprehensive risk assessments and safety testing must evaluate not just technical failures but also potential social impacts. This approach augments human intelligence while keeping humans in control of critical decisions, creating AI that is both powerful and trustworthy.
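One common way to put human-in-the-loop control into practice is a routing rule: the model's output is applied automatically only when it is both confident and non-adverse, and everything else goes to a named reviewer who can confirm or override it. The sketch below assumes a confidence threshold and a set of "adverse" outcomes; both values are hypothetical and would come from the risk assessment for a given use case. The `decided_by` and `history` fields record who made each call, which supports the accountability described above.

```python
from dataclasses import dataclass, field
from typing import Optional

CONFIDENCE_THRESHOLD = 0.90  # hypothetical; set per use case from the risk assessment
ADVERSE_OUTCOMES = {"deny"}  # hypothetical: outcomes that always require a person


@dataclass
class Decision:
    case_id: str
    model_label: str                  # the model's recommendation, e.g. "approve"
    confidence: float                 # model's probability for that label, 0..1
    final_label: Optional[str] = None
    decided_by: Optional[str] = None  # "model" or a named reviewer, for accountability
    history: list = field(default_factory=list)


def route(decision: Decision) -> str:
    """Auto-apply only confident, non-adverse outcomes; send everything else to a human."""
    if decision.model_label in ADVERSE_OUTCOMES or decision.confidence < CONFIDENCE_THRESHOLD:
        decision.history.append("queued for human review")
        return "human_review"
    decision.final_label = decision.model_label
    decision.decided_by = "model"
    decision.history.append(f"auto-applied {decision.model_label}")
    return "auto"


def human_decide(decision: Decision, reviewer: str, label: str, reason: str) -> Decision:
    """Record a reviewer's decision, which may confirm or override the model."""
    decision.final_label = label
    decision.decided_by = reviewer
    decision.history.append(f"{reviewer} set {label}: {reason}")
    return decision


confident = Decision("case-1", "approve", 0.97)
adverse = Decision("case-2", "deny", 0.99)
print(route(confident))  # auto
print(route(adverse))    # human_review
human_decide(adverse, "analyst_42", "approve", "income verified manually")
print(adverse.decided_by, adverse.final_label)  # analyst_42 approve
```

Routing every adverse outcome to a person, even when the model is highly confident, is a deliberate design choice: it keeps people in control of exactly the decisions that can cause the most harm.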

Implementing and Scaling Responsible AI

Moving from principles to practice requires embedding ethical considerations into your organization’s daily operations. This means making ethical AI development a core part of your culture.

Practical Steps for Integrating Ethical AI Development

Integrating ethical AI is a continuous journey. Here are key steps to make it a reality:

- Establish an ethical charter: Create a living document that defines your organization’s core values for AI. This charter should guide real-world decisions.

- Form diverse teams: Include ethicists, social scientists, and people from various backgrounds alongside engineers to challenge assumptions and spot blind spots.

- Conduct impact assessments: Use Ethical Impact Assessments (EIAs) as an early warning system to identify potential risks and unintended consequences before deployment.

- Implement continuous monitoring: Treat AI ethics like security: a matter of constant vigilance. Regularly audit systems and incorporate user feedback to maintain trust as AI evolves.
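Continuous monitoring often begins with checking whether the data a model sees in production still resembles its training data. A minimal sketch of one widely used drift measure, the population stability index (PSI), is shown below. The age bins and sample values are hypothetical, and the 0.1 / 0.25 alert thresholds are a common rule of thumb rather than a formal standard.

```python
import math
from bisect import bisect_right
from typing import Sequence


def bin_proportions(values: Sequence[float], cut_points: Sequence[float]) -> list[float]:
    """Share of values in each bin defined by sorted interior cut points."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect_right(cut_points, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(psi: float) -> str:
    # Common rule-of-thumb thresholds, not a formal standard.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "monitor"
    return "investigate"


cut_points = [30, 50]  # hypothetical age bins: under 30, 30-49, 50 and over
training_ages = [25, 35, 45, 55]
live_ages = [28, 41, 52, 58, 61, 70, 33, 55]
psi = population_stability_index(bin_proportions(training_ages, cut_points),
                                 bin_proportions(live_ages, cut_points))
print(round(psi, 2), drift_status(psi))
```

Here the live population skews older than the training data, so the check flags the model for investigation. The same scheduled job could also recompute fairness metrics on recent decisions, so that an audit is triggered by evidence rather than by the calendar.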

Fostering a culture of responsibility makes ethics everyone’s job. Invest in AI ethics education and encourage open collaboration among all stakeholders to build AI that serves society’s needs.

The Future of AI Ethics: Emerging Trends and Global Standards

The AI landscape is constantly evolving, bringing new ethical challenges. Technologies like generative AI and deepfakes blur the line between authentic and artificial content, creating risks of misuse and disinformation. The solution is to build in transparency, such as clear disclosure for AI-generated content.

Environmental sustainability is another emerging ethical concern. Large AI models consume vast amounts of energy, so ethical AI development must now include optimizing for energy efficiency and using renewable energy.

As AI enters critical sectors, the need for global standards is growing. While universal legislation is still developing, frameworks like UNESCO’s Recommendation on the Ethics of AI provide a human-centered blueprint. This standard emphasizes human rights, inclusivity, and environmental well-being, principles I incorporate into my guidance for organizations.

Staying ahead of these trends is key to building AI that aligns with our future values. Organizations ready to navigate this landscape can learn more about content marketing strategies for emerging tech to see how ethical considerations can drive both innovation and trust.

Conclusion: Our Commitment to AI with Integrity

AI holds immense power to reshape our world, which is why ethical AI development is not just an option but a necessity. Ethics is not a barrier to innovation; it is the foundation for creating technology that works for everyone.

By tackling bias, we build fairer systems. By prioritizing transparency, we earn trust. By safeguarding privacy, we protect what matters most. And by establishing accountability, we create a stable foundation for innovation.

This path requires collaboration between developers, leaders, policymakers, and users. It demands a culture of continuous learning with ethics at the core of every decision.

I am optimistic about our AI-driven future. With integrity as our guide, we can ensure technology genuinely improves lives. This is the work that drives me: helping organizations turn ethical challenges into opportunities for lasting, positive impact.

If your organization is ready to embrace ethical AI development and align its technological advances with the highest standards, I can help you chart the path forward. Explore my approach to consultant content marketing, and let’s discuss how to articulate your commitment to responsible AI.

The future of AI is in our hands. Let’s build it right.